Use Cases

Explore real-world applications of our solution. See how businesses across industries leverage our technology to solve complex challenges. From startups to enterprises, find out how we can transform your operations.

Certification of Neural Networks

In safety-critical systems, software certification and verification are essential to ensure that all components meet strict safety and performance standards. This is particularly crucial for AI and neural network systems, whose methods must undergo formal evaluation to establish their reliability and robustness. For these systems, a key aspect of certification is extensive verification confirming that the AI operates safely and effectively in high-stakes environments.

AI Certification in Industrial Applications

Certification is a critical process for ensuring that AI and machine learning systems follow established standards and regulations. In industrial applications where AI controls physical systems and interacts with human operators, proper assessment of AI systems is crucial to maintaining their safety, performance, and reliability. Certification not only demonstrates compliance with industry norms but also builds trust among stakeholders, including customers, partners, and regulators.

Key advantages of certifying AI systems

- Increased Safety: Ensures that AI systems meet regulatory requirements and reduces the risk of errors in critical applications.

- Legal Compliance: Helps businesses comply with the EU AI Act and international regulations and avoid legal issues.

- Enhanced Operational Efficiency: Improves performance and reliability, leading to more efficient operations.

- Innovation & Competitiveness: Drives technological advancement, opens up broader market access, and attracts partners.

ISO/IEC 24029 is a key standard focused specifically on assessing the robustness of neural networks. It provides guidance on evaluating the robustness of AI technologies, which are increasingly being integrated into industrial applications. Obtaining ISO/IEC 24029 certification is essential for companies that want to ensure their AI systems are safe, reliable, and capable of performing as intended under various conditions. It also provides a competitive advantage by enabling broader market access, attracting more business partners, and increasing revenue opportunities.

AI Certification Simplified by Datenvorsprung

At Datenvorsprung, we understand the importance of achieving ISO/IEC 24029 certification for AI-driven industrial applications. In partnership with certification authorities, we provide comprehensive safety and robustness tests that are integral to the certification process. Our software tool provides formal and statistical verification of ML algorithms, ensuring that they meet the strict requirements of ISO/IEC 24029. Additionally, we offer ongoing assessment services that keep AI systems compliant as they evolve and as new threats or challenges arise. By integrating our tool into the certification process, companies can reduce time and costs and maintain their stakeholders’ trust through continuous evaluation and adherence to industry standards. Our collaboration with industry experts ensures that AI technologies are safe, reliable, and ready for the market, giving companies a competitive advantage and broader market access.

Verification of Cyber-Physical Systems

In safety-critical cyber-physical systems, software certification and verification are essential to ensure that all components meet strict safety and performance standards. This is particularly important in AI-controlled systems. Our tool TraceTube (patent pending) is designed to verify such systems. TraceTube analyzes a system based on test data only, without requiring knowledge of the model itself, so you never need to transmit your trained AI model.

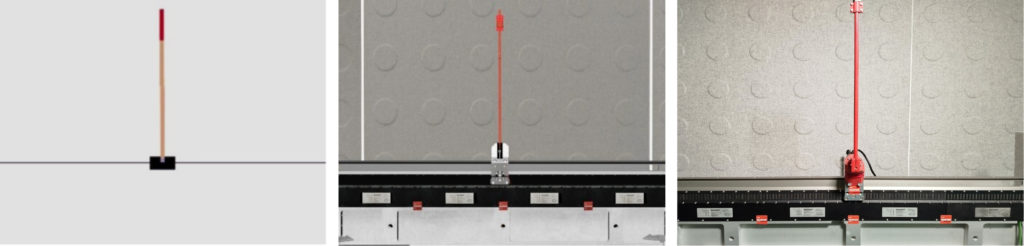

Verification of an AI-controlled Cartpole System

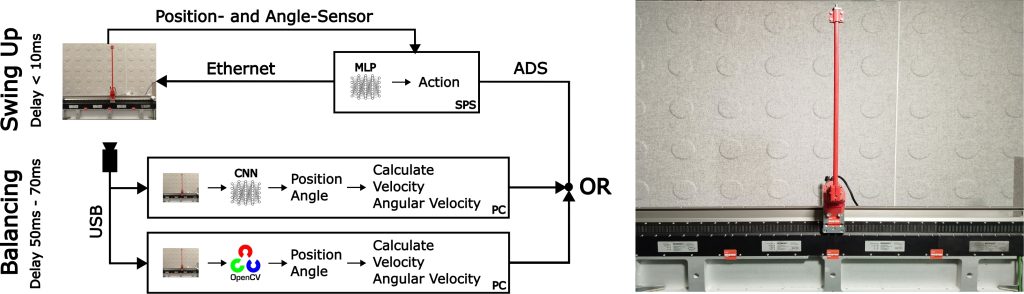

The system consists of a movable, magnetically driven cart on an XTS transport rail from Beckhoff Automation GmbH, with a free-swinging pole mounted on the cart. Sensors measure both the cart’s position and the pole’s angle. The system executes the following sequence:

- Swing-up of the pole using a sensor-based swing-up model (inputs: cart position and pole angle).

- Balancing of the pole using the same sensor-based swing-up model for a predefined number of time steps.

- Balancing of the pole using an image-based balancing model (image processing is done either with OpenCV or with a CNN).
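The three-phase sequence above is essentially a mode scheduler. The following is a minimal sketch under stated assumptions: the mode names, the hand-over rule (leave swing-up once |angle| ≤ 0.3 rad, with 0 rad meaning upright) and the `balance_steps` value are hypothetical choices for illustration, not the controller logic used on the Beckhoff setup.

```python
from enum import Enum, auto
from typing import List, Sequence


class Mode(Enum):
    SWING_UP = auto()        # sensor-based swing-up model
    SENSOR_BALANCE = auto()  # same sensor-based model, now balancing
    IMAGE_BALANCE = auto()   # image-based balancing model (OpenCV or CNN)


def next_mode(mode: Mode, angle_rad: float, steps_in_mode: int,
              upright_tol: float = 0.3, balance_steps: int = 500) -> Mode:
    """Hypothetical switching rule for the three-phase sequence."""
    if mode is Mode.SWING_UP and abs(angle_rad) <= upright_tol:
        return Mode.SENSOR_BALANCE  # pole is close to upright (0 rad)
    if mode is Mode.SENSOR_BALANCE and steps_in_mode >= balance_steps:
        return Mode.IMAGE_BALANCE   # hard hand-over to the image-based model
    return mode


def run_schedule(angles: Sequence[float], balance_steps: int = 500) -> List[Mode]:
    """Replay a sequence of measured pole angles and record the active mode per step."""
    mode, steps_in_mode, modes = Mode.SWING_UP, 0, []
    for angle in angles:
        new_mode = next_mode(mode, angle, steps_in_mode, balance_steps=balance_steps)
        # Reset the step counter whenever the mode changes.
        steps_in_mode = steps_in_mode + 1 if new_mode is mode else 0
        mode = new_mode
        modes.append(mode)
    return modes
```

Making the switch explicit like this pins down exactly which time steps belong to the hard transition between angle-based and video-based balancing, which is the phase whose safety is analyzed in the cases below.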

Unsafe States In The Cartpole System

During the swing-up of this cart-pole system, the cart has to accelerate hard in both directions. To protect both the hardware and bystanders, it is critical to test the system’s safety. Here, the term “safety” means that the cart’s position never exceeds the rail’s physical boundaries at any moment during the swing-up procedure.

Because image processing introduces a large delay, balancing based on the video input uses a different controller than sensor-based balancing. The different behavior of the two controllers, and the resulting hard transition from angle-based to video-based balancing, also require a TraceTube analysis of the system’s safety. In this case, the term “safety” implies not only that the cart stays within bounds but also that the pendulum remains upright during the transition phase.
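Both notions of safety are "always" properties over an entire trace. A minimal sketch, assuming each recorded trace is a list of per-time-step samples with cart position in mm and pole angle in rad; the limits are the ones used in the test cases below ([−500 mm, 500 mm] and [−0.3 rad, 0.3 rad]), and the function names are hypothetical.

```python
from typing import Sequence

RAIL_LIMITS_MM = (-500.0, 500.0)  # physical limits of the track
ANGLE_LIMITS_RAD = (-0.3, 0.3)    # band regarded as "upright"


def always_within(signal: Sequence[float], lo: float, hi: float) -> bool:
    """True if every sample of the trace lies in [lo, hi] (a 'globally' property)."""
    return all(lo <= x <= hi for x in signal)


def swing_up_safe(position_mm: Sequence[float]) -> bool:
    # The cart must never leave the rail, at any time step.
    return always_within(position_mm, *RAIL_LIMITS_MM)


def transition_safe(position_mm: Sequence[float], angle_rad: Sequence[float]) -> bool:
    # During the hand-over, the cart must stay on the rail AND the pole must stay upright.
    return swing_up_safe(position_mm) and always_within(angle_rad, *ANGLE_LIMITS_RAD)
```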

Verification Utilizing TraceTube

TraceTube was used to determine the safety of three different cases.

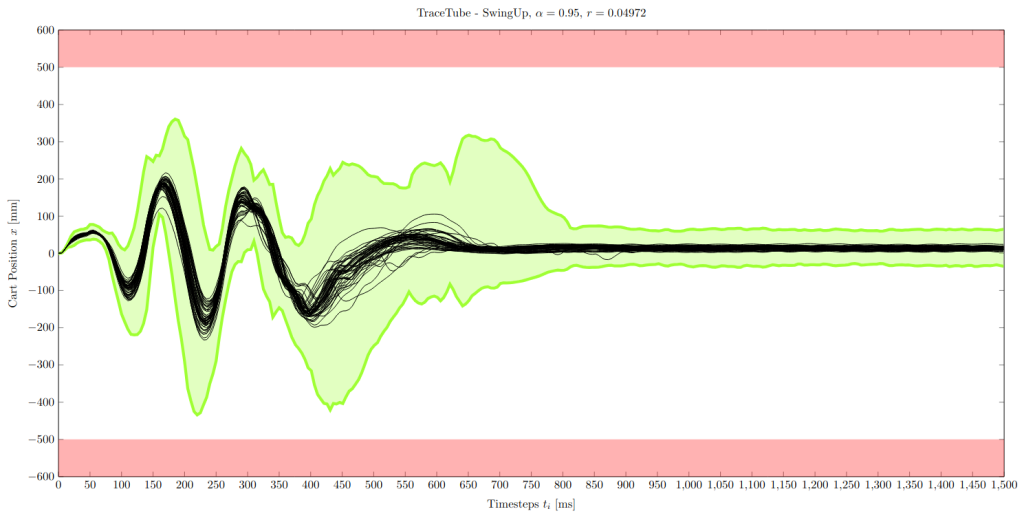

1) Testing the safety of the swing-up

A safe swing-up is defined by the cart not exceeding the track’s physical limits of [−500 mm, 500 mm]. The following plot shows the tube for the cart’s position as computed by TraceTube. The constructed tube is correct with a standard confidence of α = 95%. The risk (the maximum percentage of traces leaving the tube at some time step) is calculated as pr = 4.972%. Because the tube does not reach any unsafe state, the same values can be used for the safety argument.

With a confidence of α = 95%, the TraceTube algorithm can therefore verify both the constructed tube and, since the tube does not intersect any unsafe state at any time step, the system’s safety during this phase with a risk of pr = 4.972%.

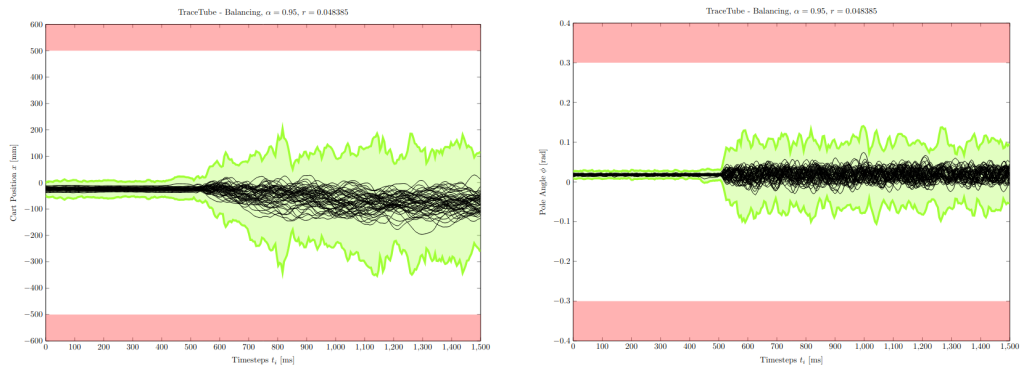
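The argument in case 1, that a tube which never touches an unsafe state lets the tube's risk serve as the safety risk, can be illustrated with a simple stand-in. This is not TraceTube's (patent-pending) algorithm: the sketch assumes a per-time-step min/max envelope built from one set of sampled traces, and bounds the probability of leaving it with an exact one-sided Clopper-Pearson bound computed from fresh validation traces. All function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Tube = Tuple[List[float], List[float]]  # (lower, upper) bound per time step


def build_tube(traces: Sequence[Sequence[float]], margin: float = 0.0) -> Tube:
    """Per-time-step min/max envelope of equally long 1-D traces, widened by margin."""
    columns = list(zip(*traces))  # samples grouped by time step
    lower = [min(c) - margin for c in columns]
    upper = [max(c) + margin for c in columns]
    return lower, upper


def leaves_tube(trace: Sequence[float], tube: Tube) -> bool:
    lower, upper = tube
    return any(not (lo <= x <= hi) for x, lo, hi in zip(trace, lower, upper))


def binom_cdf(k: int, n: int, p: float) -> float:
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def risk_upper_bound(exits: int, n: int, confidence: float = 0.95,
                     tol: float = 1e-10) -> float:
    """One-sided Clopper-Pearson upper bound on the probability of leaving the tube,
    given `exits` tube exits among `n` fresh traces (found by bisection)."""
    if exits >= n:
        return 1.0
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        # The binomial CDF decreases in p; the bound is where it equals 1 - confidence.
        if binom_cdf(exits, n, mid) > 1 - confidence:
            lo = mid
        else:
            hi = mid
    return hi


def tube_is_safe(tube: Tube, limits: Tuple[float, float] = (-500.0, 500.0)) -> bool:
    # The tube touches no unsafe state if all its bounds stay within the rail limits.
    lower, upper = tube
    return min(lower) >= limits[0] and max(upper) <= limits[1]
```

For instance, if none of 59 fresh traces leaves the tube, `risk_upper_bound(0, 59)` comes out just below 5%. Since any trace that enters an unsafe state must first leave a tube lying entirely inside the safe set, the same bound also limits the probability of an unsafe trace.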

2) Testing the safety of balancing when switching from the sensor-based swing-up model to the image-based OpenCV balancing model.

Safe balancing requires

1. the cart not exceeding the track’s physical limits of [−500 mm, 500 mm], and

2. the pole remaining in an upright position within [−0.3 rad, 0.3 rad] at every time step.

Both constraints were checked together in a single TraceTube run. The resulting reach tubes for the cart’s position and the pole’s angle are shown in the following graph.

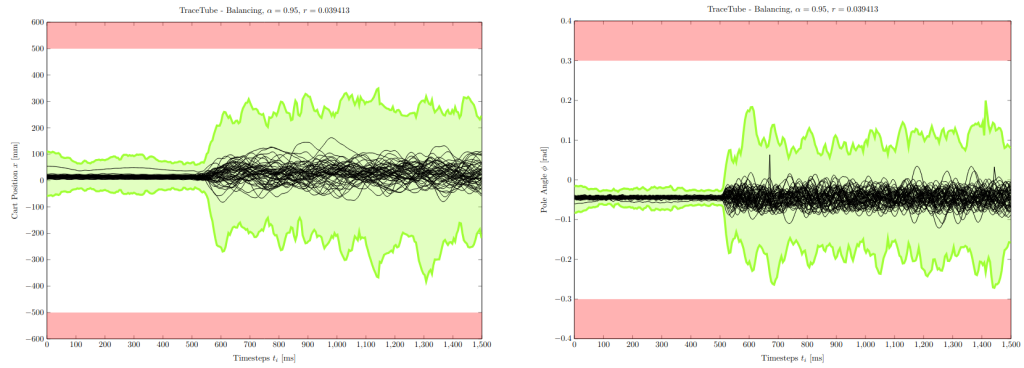
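Checking both constraints in one run amounts to testing every box of a multi-dimensional reach tube against a safe set defined per variable. A minimal sketch, assuming the tube is given as a list of per-time-step boxes keyed by hypothetical variable names (`position_mm`, `angle_rad`); it reports every time step and variable at which the tube leaves the safe set.

```python
from typing import Dict, List, Tuple

Interval = Tuple[float, float]
Box = Dict[str, Interval]

# Safe sets from case 2: cart position in mm, pole angle in rad.
SAFE_SET: Dict[str, Interval] = {
    "position_mm": (-500.0, 500.0),
    "angle_rad": (-0.3, 0.3),
}


def check_tube(tube: List[Box], safe_set: Dict[str, Interval] = SAFE_SET) -> List[Tuple[int, str]]:
    """Return (time_step, variable) pairs where the tube's box leaves the safe set.

    An empty list means every box of the reach tube satisfies all constraints.
    """
    violations = []
    for t, box in enumerate(tube):
        for name, (safe_lo, safe_hi) in safe_set.items():
            lo, hi = box[name]
            if lo < safe_lo or hi > safe_hi:
                violations.append((t, name))
    return violations
```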

3) Ensuring the safety of balancing when switching from the sensor-based swing-up model to the image-based CNN balancing model.

AI Controllers in Automation

Industrial automation is revolutionizing the manufacturing sector by efficiently managing complex and dynamic processes. With the rise of Industry 4.0 technologies, ensuring the safety and reliability of these systems has become a critical concern. Since AI systems often rely on black-box models whose outputs are hard to predict, there is a growing need to formally evaluate and verify them. By designing safe AI controllers and integrating verification tools into cyber-physical systems, companies can optimize processes and ensure the safety of their systems.

AI-Driven Industrial Transformation

AI controllers play a major role in industrial transformation: they make real-time decisions that directly affect the operation of individual machines or entire production lines. Safety is crucial in such environments, where black-box machine learning models are used. Any malfunction or incorrect decision by an AI controller can lead to significant safety hazards, such as equipment failure, production downtime, or even harm to human operators.

For example, consider an AI-driven robotic arm in a manufacturing plant that works alongside human operators and must execute material handling tasks precisely. If the AI controller guiding the robot makes an incorrect decision, the robot could injure someone or cause production losses. The complexity of such systems therefore requires comprehensive assessment to ensure that they operate safely and effectively under all conditions.

- Process Optimization: Reduces material waste and energy consumption through precise real-time control.

- Adaptability: Supports quick retraining and reconfiguration to handle different production sizes and requirements.

- Compatibility: Works with a variety of machine spare parts of different qualities and performance specifications.

Strategic Partnership for AI-Driven Industrial Automation

At Datenvorsprung, we have developed a specialized tool that enhances safety and operational excellence in industrial automation. Our tool statistically verifies that AI controllers, such as those of ML-driven robots, stay within previously defined safe operating zones. This verification process can be integrated directly into the model development and testing pipeline, allowing teams to monitor system behavior as new data is introduced. By using our tool, companies can confidently deploy AI-driven automation solutions and significantly reduce the risk of unintended behavior in AI-driven systems such as industrial controllers. Ultimately, our technology provides companies with the robustness, reliability, and efficiency they need to stay competitive.

Datenvorsprung offers the following services for industrial automation:

- Safe AI Controller Development: We design customized AI controllers for industrial applications to perform flexible tasks, ensuring they are robust, adaptable, and capable of performing safely in a wide range of operational scenarios.

- AI Verification System: Using our in-house verification tool (patent pending), we provide thorough statistical analysis and validation of AI controllers, providing statistical guarantees that machinery performs as intended.

Partnership With Industry Leaders

In partnership with Beckhoff Automation GmbH, Datenvorsprung developed a use case to leverage the expertise of both companies in creating a robust, AI-driven control system. This collaboration brought together Beckhoff’s advanced hardware capabilities and Datenvorsprung’s cutting-edge AI software solutions to manage and verify machine learning-driven robots in industrial environments.

In this collaboration, Datenvorsprung developed an AI controller for the challenging pendulum swing-up task on the Beckhoff hardware. After designing the AI, we used our in-house verification tool to verify the system’s safety and robustness, including its ability to maintain stability. This integrated setup enabled the simulation and verification of a classic control problem, the cart-pole system, and also demonstrated how our tool can be applied in industrial automation.

Component Robustness

In modern industrial systems, sensors play a vital role in data collection and decision-making. However, relying on multiple sensors can become costly and problematic, especially in dynamic environments. For AI-driven applications, the data fed to machine learning models must remain consistent and dependable. Making a system robust enough to work effectively with fewer, more resilient sensors reduces equipment redundancy and minimizes costs. The ability to fall back on alternative data sources, such as cameras, when necessary gives companies flexibility and operational efficiency and lowers the risk of system failure.

System Robustness Against Fluctuating Component Properties

In industrial automation, sensors are exposed to a wide range of challenges, including temperature fluctuations, electromagnetic interference, mechanical vibrations, and potential physical obstructions. The ability of a sensor to function optimally in these conditions without degradation in performance is a testament to its robustness. Additionally, the accurate transmission of sensor data is imperative for the effective training and real-time deployment of machine learning (ML) models. Any deviation or noise in the sensor data can lead to suboptimal decision-making by the AI system, which in turn could compromise the operational integrity of the entire system.

Our Solution

At Datenvorsprung, we focus on system robustness by providing advanced tools for analyzing how AI systems perform with different sensor characteristics, such as varying quality, price, or manufacturer. Our technology ensures that your AI-driven system remains consistent and reliable, regardless of the sensors used, without the need for individual testing under all conditions. Additionally, our tool allows smooth integration of alternative data sources, such as camera inputs, to take over when sensors fail or perform poorly. This adaptive capability minimizes equipment redundancy, reduces operational costs, and enhances overall efficiency. By embedding these solutions into system design, companies can achieve greater operational flexibility and long-term cost savings, ensuring their AI systems perform reliably in real-world environments.

Key Benefits of Robustness Analysis Solutions by Datenvorsprung

- Performance Consistency Across Component Variants: Anticipating system behavior to maintain optimal performance across different components, regardless of manufacturer, type, or price range.

- Error Handling & Failover: Allowing smooth integration of alternative data sources, such as camera inputs, to take over when sensors fail or perform poorly in AI systems, reducing operational costs and increasing efficiency.

- Ensuring Reliability of AI Systems: Ensuring the desired system behavior under varying environmental conditions to sustain long-term robustness and safety.

By implementing our tools and AI methodologies, our partners can ensure system robustness, which is essential for the reliable operation of AI-driven automation processes. They can also operate more flexibly, minimize equipment redundancy, reduce operational costs, and increase overall efficiency.
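One way to realize the failover to alternative data sources described above is a plausibility gate in front of the model input. The sketch below is hypothetical and is not Datenvorsprung's tool: it assumes a sensor reading counts as healthy when it is present, finite, inside a physical range, and does not jump by more than `max_jump` from the last accepted value; otherwise the camera estimate is used.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class FailoverSelector:
    """Hypothetical gate: use the primary sensor if plausible, else the camera estimate."""
    lo: float                     # lowest physically possible value
    hi: float                     # highest physically possible value
    max_jump: float               # largest plausible change between two steps
    last: Optional[float] = None  # last value passed on to the model

    def select(self, sensor: Optional[float], camera: float) -> Tuple[float, str]:
        healthy = (
            sensor is not None
            and math.isfinite(sensor)
            and self.lo <= sensor <= self.hi
            and (self.last is None or abs(sensor - self.last) <= self.max_jump)
        )
        value, source = (sensor, "sensor") if healthy else (camera, "camera")
        self.last = value
        return value, source
```

A robustness analysis would then check that the AI system stays within its safe bounds whichever source the gate selects.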

Simulation to Reality (Sim2Real Gap)

In many industrial applications, simulators play a vital role in developing and testing machine learning (ML) models. However, a significant challenge arises when these models, trained and validated in simulated environments, fail to perform reliably in real-world scenarios. This challenge, often referred to as the “reality gap,” is one of the most critical obstacles in deploying ML models for industrial use. The reality gap stems from several factors, including issues with system identification, incomplete or simplified modeling, and inherent hardware limitations. Bridging this gap is critical for ensuring that ML models operate safely and effectively in real-world industrial scenarios.

Our Solution For The Sim2Real Gap

By performing interval analysis on the system’s operational parameters, we can determine the boundaries within which the system operates reliably. If the system remains safe across wide parameter ranges in simulation, we can be more confident about its real-world performance. Our technology provides two key insights: a clear yes-or-no answer on whether the simulated system will work under real-world conditions, and an understanding of the parameter ranges within which the system can operate safely and effectively.

An example of the Sim2Real gap is a robot arm that is controlled by a deep learning model and tested in simulation. While the robot might perform well in a controlled simulation, its performance could degrade in a real-world manufacturing environment with variable conditions, potentially causing errors or safety risks. Such gaps matter wherever ML models are deployed outside of simulation, where the environment is far less predictable, and addressing them is a challenge in many industries, including automotive, aerospace, and healthcare.
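Interval analysis of this kind can be illustrated with plain interval arithmetic. The sketch assumes a hypothetical one-dimensional closed loop `x_{t+1} = (a - b*k) * x_t` with an uncertain plant parameter `a`. Because interval arithmetic over-approximates every possible trajectory, a `True` result holds for the whole parameter range (the yes-or-no answer), while splitting the range into pieces recovers the sub-ranges that can be proven safe. The model, gains, and bound are illustrative assumptions, not the method used in our tool.

```python
from typing import List, Tuple

Interval = Tuple[float, float]


def imul(a: Interval, b: Interval) -> Interval:
    """Interval product: the min and max over the four endpoint products."""
    products = (a[0] * b[0], a[0] * b[1], a[1] * b[0], a[1] * b[1])
    return min(products), max(products)


def verify(a_range: Interval, x0: Interval = (-0.5, 0.5), b: float = 1.0,
           k: float = 0.5, steps: int = 50, bound: float = 1.0) -> bool:
    """Check |x_t| <= bound for t = 0..steps and every parameter a in a_range.

    Hypothetical closed loop x_{t+1} = (a - b*k) * x_t (state feedback u = -k*x).
    The interval gain even covers an a that varies over time, so True is a proof,
    while False only means "could not be proven safe".
    """
    gain = (a_range[0] - b * k, a_range[1] - b * k)
    x = x0
    for _ in range(steps + 1):
        if x[0] < -bound or x[1] > bound:
            return False
        x = imul(gain, x)
    return True


def safe_subranges(a_range: Interval, pieces: int, **kwargs) -> List[Interval]:
    """Split a_range into equal pieces and keep those that verify as safe."""
    lo, hi = a_range
    width = (hi - lo) / pieces
    candidates = [(lo + i * width, lo + (i + 1) * width) for i in range(pieces)]
    return [c for c in candidates if verify(c, **kwargs)]
```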

Benefits of Our Technology

Our technology addresses the Sim2Real gap by providing formal statistical verification of AI models, ensuring that their performance in simulation is accurately reflected in real-world applications. At Datenvorsprung, we help ensure the robustness of AI systems, focusing on how consistent a model’s output remains when it is exposed to new environmental conditions. Our tool is specifically designed to ensure that an AI model’s performance does not deviate significantly from expectations when transitioning from simulation to reality.

- Enhanced System Identification: Improved accuracy in identifying system dynamics within simulations, leading to more realistic models.

- Improved Modeling Completeness: Advanced techniques that account for a broader range of variables so that proper functioning is assured in reality. This minimizes discrepancies between simulated and real environments.

- Controller Assessment: Robustness testing for controllers in simulations to predict and optimize real-world performance.

Datenvorsprung helps to narrow the Sim2Real gap, enabling safer and more effective deployment of AI-driven solutions in industrial environments.

Autonomous Driving

In autonomous driving systems, ensuring both safety and robust performance is critical due to the complex and unpredictable nature of real-world environments. Autonomous vehicles must navigate a variety of conditions, from different traffic patterns to various weather and lighting scenarios, while adhering to regulatory standards and providing a reliable and comfortable user experience. Achieving these objectives requires verification of AI Systems controlling these vehicles to maintain consistent safety and performance.

Challenges in Autonomous Driving Systems

Our In-House Technology

Datenvorsprung addresses the challenges in autonomous driving systems by providing formal verification and analysis tools for the AI algorithms that control these vehicles. Our tool ensures that the AI operates within predefined safe bounds across various scenarios, such as different road and weather conditions. By proactively identifying potential safety risks and ensuring compliance with industry standards, our tool helps prevent accidents and builds trust among users and regulators. By integrating our tool into the development and testing phases, automotive companies can accelerate the certification and deployment of their autonomous vehicles, ensuring they are ready for real-world challenges and meet the highest safety standards.

- Verification of Prediction & Control Systems: Our tool evaluates the behavior of prediction algorithms and control systems within autonomous vehicles. It ensures that the vehicle’s decision-making processes are verified in real-world scenarios.

- Safe Operational Boundaries: By establishing confidence intervals and safety margins, our tool assesses whether ML models operate within predefined operational bounds, helping to manage the uncertainty of black-box ML models and ensuring safe vehicle operation.

- Stability in Different Conditions: Our solution can be applied repeatedly as new data is obtained or as the ML model is updated. This repeated evaluation helps maintain the reliability of the models.

- Adaptation to Varied Conditions: The tool helps track the vehicle’s ability to adapt to a range of environmental conditions, such as road and weather conditions. This adaptability is crucial for maintaining effective performance.

Case Study: Validating an Autonomous Driving Model Under Varying Lighting Conditions

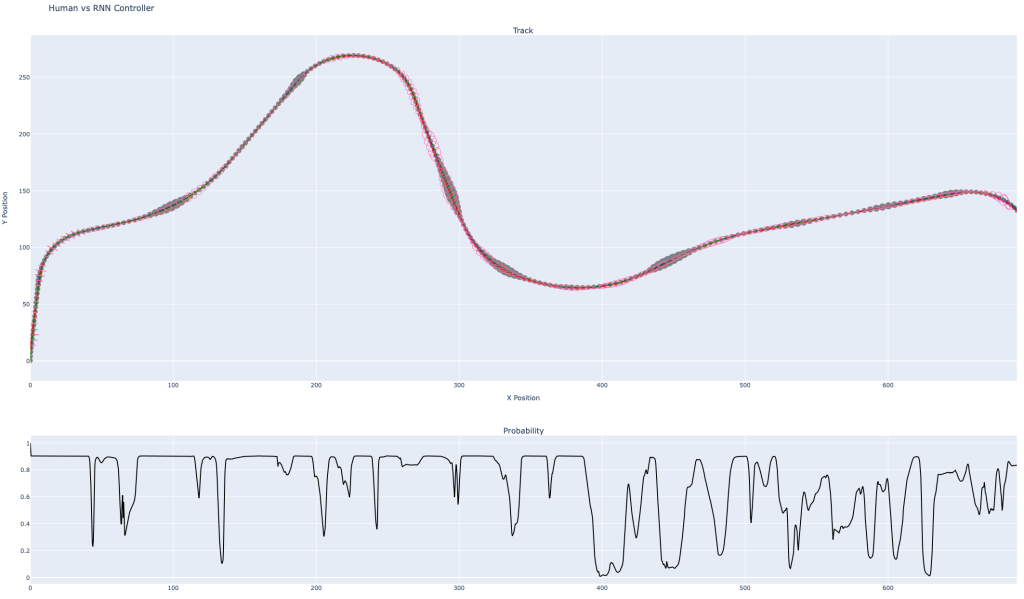

In this case study, we aimed to compare the performance of an autonomous driving system with that of an expert human driver under different lighting conditions. The expert driver completed several laps on a race track, and their driving path was recorded as the optimal trajectory. This expert path served as the reference for evaluating the safety and accuracy of the autonomous Recurrent Neural Network (RNN) model, which was trained to navigate the same track.

The evaluation focused on two key safety criteria:

- The RNN’s trajectory should remain close to the expert’s path.

- The RNN must stay on the road at all times.
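The two criteria can be made precise as distance checks in the track plane. A minimal sketch, assuming trajectories, the expert path, and the road centerline are given as 2-D polylines, and that "close" and "on the road" are expressed by a hypothetical maximum deviation and road half-width; the case study does not state these thresholds.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Euclidean distance from p to the line segment a-b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0.0:
        return math.dist(p, a)
    # Project p onto the segment and clamp the projection to its endpoints.
    t = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / seg_len2
    t = max(0.0, min(1.0, t))
    return math.dist(p, (a[0] + t * dx, a[1] + t * dy))


def distance_to_polyline(p: Point, polyline: Sequence[Point]) -> float:
    # Minimum over all segments; the polyline needs at least two points.
    return min(point_segment_distance(p, a, b) for a, b in zip(polyline, polyline[1:]))


def evaluate_run(trajectory: Sequence[Point], expert_path: Sequence[Point],
                 centerline: Sequence[Point], half_width: float,
                 max_deviation: float) -> Tuple[bool, bool]:
    """Return (close_to_expert, on_road) for one recorded RNN trajectory."""
    deviation = max(distance_to_polyline(p, expert_path) for p in trajectory)
    on_road = all(distance_to_polyline(p, centerline) <= half_width for p in trajectory)
    return deviation <= max_deviation, on_road
```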

Simulating Varying Lighting Conditions

One challenge in autonomous driving is how well systems handle different lighting scenarios. To test this, we simulated various lighting conditions by adjusting the brightness of the images fed to the RNN model, from extremely bright to very dark. The goal was to see if the RNN could still meet the safety criteria under these varied light levels.
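A simple way to emulate such lighting changes is to scale pixel intensities and then look for the largest contiguous range of tested scaling factors for which the criteria still hold. The sketch assumes 8-bit grayscale images stored as nested lists and a hypothetical `passes(factor)` callback that runs the model on perturbed inputs and applies both safety criteria; the multiplicative brightness model is itself an assumption, not necessarily how the case study adjusted brightness.

```python
from typing import Callable, List, Optional, Sequence, Tuple


def adjust_brightness(image: Sequence[Sequence[int]], factor: float) -> List[List[int]]:
    """Scale 8-bit pixel intensities by factor and clip the result to [0, 255]."""
    return [[min(255, max(0, round(v * factor))) for v in row] for row in image]


def safe_brightness_range(factors: Sequence[float],
                          passes: Callable[[float], bool]) -> Optional[Tuple[float, float]]:
    """Largest contiguous run of tested factors (in sorted order) that pass both criteria."""
    fs = sorted(factors)
    ok = [passes(f) for f in fs]
    best = None  # (run length - 1, start index, end index)
    i = 0
    while i < len(fs):
        if not ok[i]:
            i += 1
            continue
        j = i
        while j + 1 < len(fs) and ok[j + 1]:
            j += 1
        if best is None or j - i > best[0]:
            best = (j - i, i, j)
        i = j + 1
    return None if best is None else (fs[best[1]], fs[best[2]])
```

The result only covers the factors that were actually tested; the gaps between neighboring test points are not verified by this sketch.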

Results from Formal Verification

Our formal verification process was applied to determine the safe operational bounds of the RNN model under the different lighting conditions. The analysis identified a range of brightness values where the RNN continued to perform reliably and safely, without straying from the road or deviating too much from the expert’s trajectory.

Bird’s-eye view of the racetrack comparing the trajectories of the human driver and the autonomous RNN model. The red circles represent the bounds for the RNN’s state under varying lighting conditions. The grey blobs indicate the maximum deviation between the RNN’s path and the human driver’s, the colored traces show the different RNN trajectories.

While the model performed well within this defined range, it failed to maintain proper control under extreme lighting conditions. A safe range of brightness values was established, providing information that could hypothetically be used to refine or adjust the system’s deployment parameters.

This case study illustrates how formal verification can be applied to evaluate and improve the performance of autonomous driving models in real-world scenarios.